Model Evaluations

Model evaluation in Falkonry is essential for assessing how well a model fits historical data and how accurately it may perform in the future. It involves applying the model to a target dataset and analyzing the output using various metrics and visualizations. Evaluations are generated automatically when a model is run over any time period and can be accessed via the Evaluations tab within an assessment.

How to Perform a Model Evaluation

To evaluate a model in Falkonry:

- Go to the Model Manager page within your assessment.

- Select the model to evaluate.

- Click Evaluate from the model’s action menu.

- In the Evaluate Model dialog, define:

    - The evaluation time range(s)

    - The event groups (ground truths) to compare against

- Track evaluation progress from the Activity list (bell icon).

Understanding Evaluation Results

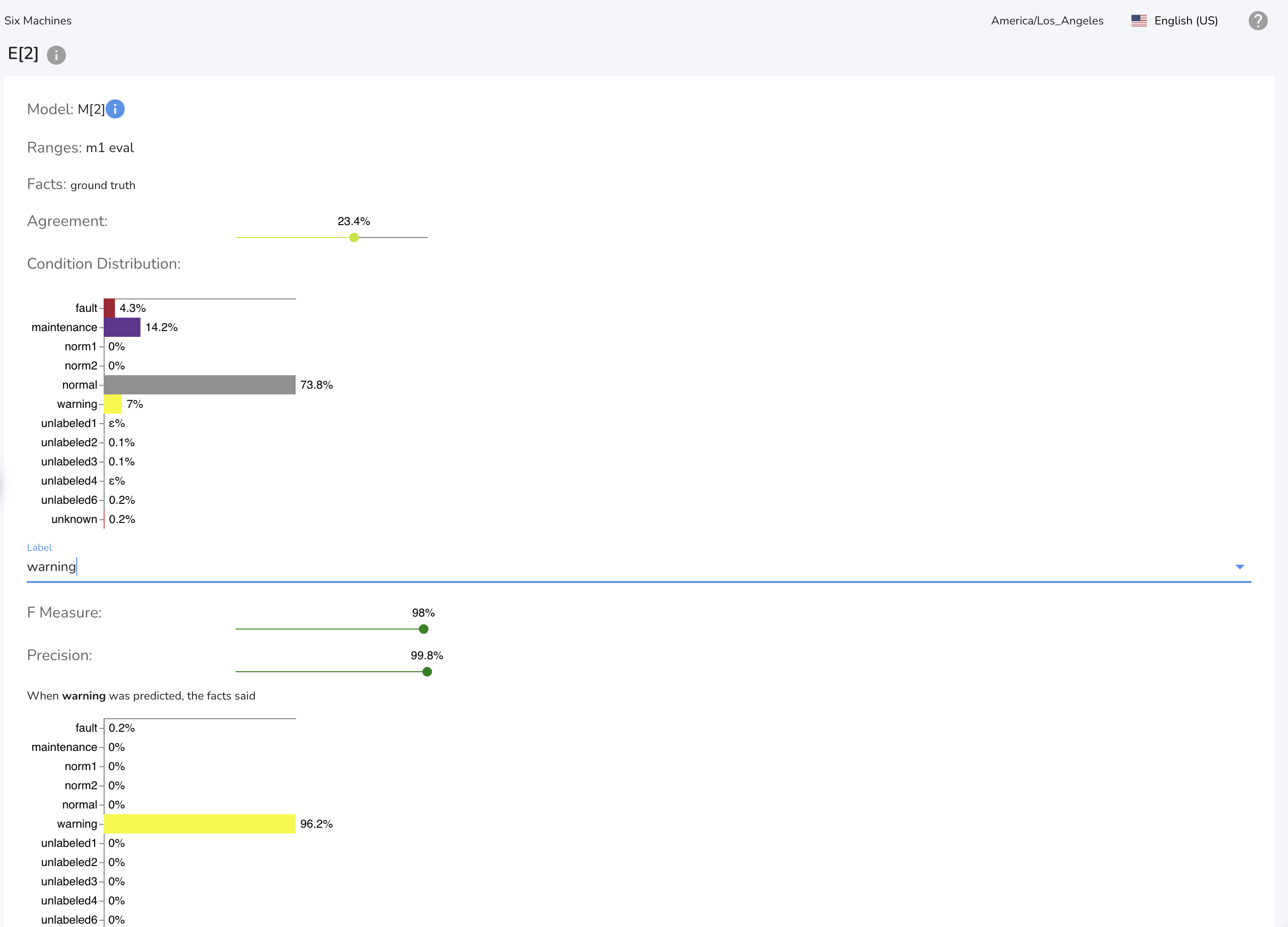

The evaluation results depend on model type (supervised vs. unsupervised) and the presence of ground truth data. Key metrics and visualizations include:

- Model Condition Distribution: Displays the frequency of each condition during the evaluation period. For unsupervised models, 5–8 distinct conditions are ideal, depending on use case.

- Evaluation Tools:

    - Explanation Score: Ranges from -1.0 to +1.0 and shows each signal’s influence on the prediction. Positive values indicate strong correlation with the outcome; negative values indicate correlation with other outcomes.
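To make the idea of a condition distribution concrete, the following is a minimal sketch that computes the share of each condition in a sequence of model outputs. It runs outside Falkonry and does not call any Falkonry API; the function name `condition_distribution` and the sample labels are assumptions made for illustration only.

```python
from collections import Counter


def condition_distribution(conditions):
    """Return {condition: fraction of samples} for a sequence of condition labels."""
    counts = Counter(conditions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


# Hypothetical model output: one condition label per evaluated time step.
output = ["normal"] * 6 + ["warning"] * 3 + ["failure"] * 1
dist = condition_distribution(output)

# Print conditions from most to least frequent.
for label, share in sorted(dist.items(), key=lambda kv: -kv[1]):
    print(f"{label}: {share:.0%}")

# Number of distinct conditions seen in the evaluation period.
print("distinct conditions:", len(dist))
```

The count of distinct conditions printed at the end is the figure to compare against the 5–8 guideline for unsupervised models mentioned above.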

Best Practices for Model Evaluation

- Make it Iterative: Review and refine models continuously based on evaluations.

- Compare Iterations: Use comparison charts to assess model performance over time.

- Segment Long Ranges: For periods longer than 6 months, evaluate in 1–2 month chunks for faster results.

- Add Descriptive Labels: Clearly describe each evaluation with its time range for easy navigation.

- Use Precise Ground Truth:

    - Ground truth is vital for validation.

    - Include start/end times, description, impact, issue type, equipment ID, and relevant signals.

    - Work with Falkonry tools like reports for output comparison and validation reporting.

- Manage False Positives: Use ground truth and condition distribution to refine alert conditions.

- Handle Low Accuracy Labels:

    - Add more training data or features.

    - Adjust model parameters.

    - Reassess label quality or distinctiveness.

- Validate Before Deployment: Ensure your model is validated for key failure/warning modes, and share validation reports with end-users.
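The advice to segment long ranges and label each evaluation with its time range can be prepared ahead of time with a small script. The sketch below is one possible way to do that, assuming fixed-length chunks measured in days. The helper `split_range`, its `chunk_days` parameter, and the label format are hypothetical and not part of Falkonry; the resulting ranges would still be entered in the Evaluate Model dialog by hand.

```python
from datetime import datetime, timedelta


def split_range(start, end, chunk_days=30):
    """Split [start, end) into consecutive chunks of at most chunk_days days."""
    if chunk_days <= 0:
        raise ValueError("chunk_days must be positive")
    if end <= start:
        return []
    step = timedelta(days=chunk_days)
    chunks = []
    cursor = start
    while cursor < end:
        # The final chunk is clipped so it never runs past the requested end.
        chunk_end = min(cursor + step, end)
        chunks.append((cursor, chunk_end))
        cursor = chunk_end
    return chunks


# Hypothetical 8-month evaluation period, split into chunks of about two months.
chunks = split_range(datetime(2023, 1, 1), datetime(2023, 9, 1), chunk_days=61)
for chunk_start, chunk_end in chunks:
    # A descriptive label that states the time range of each evaluation.
    print(f"Evaluation {chunk_start:%Y-%m-%d} to {chunk_end:%Y-%m-%d}")
```

Fixed day counts keep the sketch free of third-party dependencies; chunks aligned to calendar months would need extra date arithmetic.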